Tracking pedestrians in crowds is very difficult due to the high level of inter-pedestrian occlusions. Tracking rectangular pedestrian bounding boxes in such environment is therefore almost impossible. In this research, we looked at real-time motion-based segmentation techniques to be used to track only regions that belong to the targeted object. We focus on one stage of the tracking system where a segmentation is propagated from frame to frame without intermediary pedestrian detection. For this research we focus on two metrics: the time before failure without re-detection and the quality of the propagated segmentation. The dataset used for evaluation is available for download on this website.

00-crossing-300.avi - Video [3.7MB]: Input video, 300 first frames from 879-38_l.mov. Extracted from the UCF Crowd Dataset.

01-crossing-edge-300.avi - Video [13MB]: Ouput of a simple canny edge detector on the video. A human can track pedestrian easily without texture information.

02-crossing-groundtruth.avi - Video [3.5MB]: Video of the ground-truth segmentation provided for 10 people.

03-crossing-compressive-tracking-300.avi - Video [3.1MB]: Video of tracking results obtained with the Compressive Tracking method [1].

04-crossing-two-gran-tracking-300.avi - Video [3.5MB]: Video of tracking results obtained with the Two-Granularity Tracking method [2].

05-crossing-ours-id1.avi - Video [6.6MB]: Video of tracked pixels for one individual using our method

06-crossing-ours-all.avi - Video [7.7MB]: Video of tracking results obtained with our method.

07-crossing-ours-segmentation-error.avi - Video [9.1MB]: Video of Segmentation errors observed with our method (True Positive is blue, False Positive is red).

[1] Kaihua Zhang, Lei Zhang, and Ming-Hsuan Yang. Real-time compressive tracking. In ECCV12, pages 864-877, 2012.

[2] Katerina Fragkiadaki, Weiyu Zhang, Geng Zhang, and Jianbo Shi. Two-granularity tracking: Mediating trajectory and detection graphs for tracking under occlusions. In ECCV12, pages 552-565, 2012.

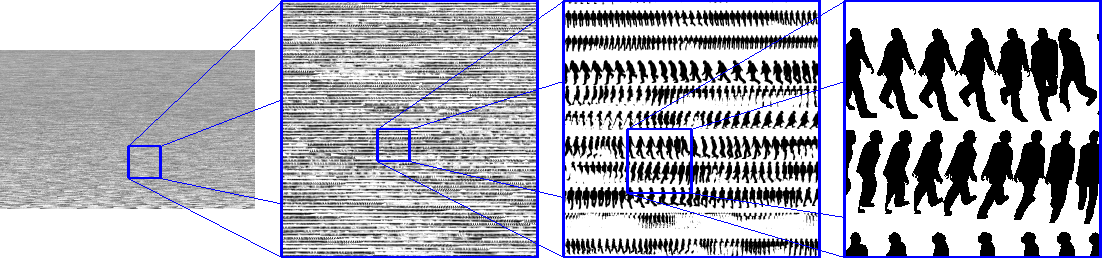

Pedestrian dense segmentation in complex scene is very difficult and time consuming to acquire manually. Since pedestrian shape priors are needed in many applications, a synthetic ground-truth dataset was constructed from simulated crowds. The 1.8 million silhouettes dataset can be downloaded on this page.

|

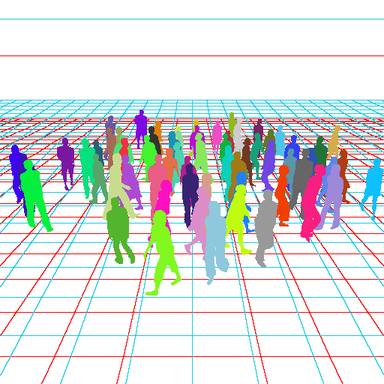

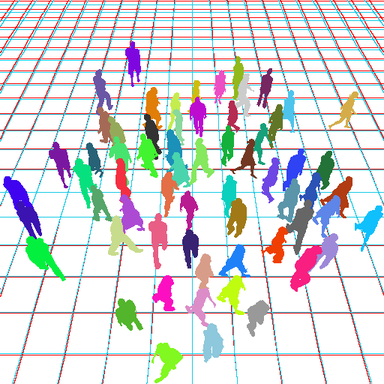

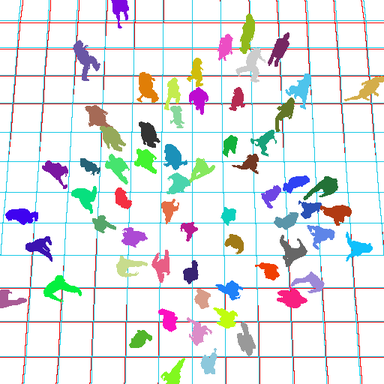

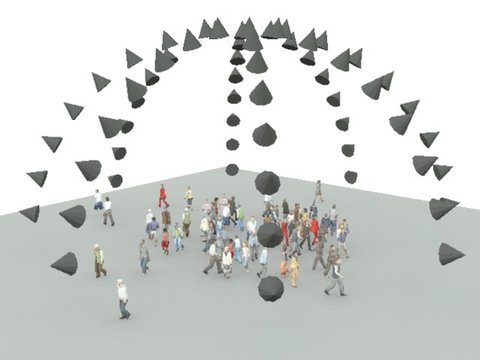

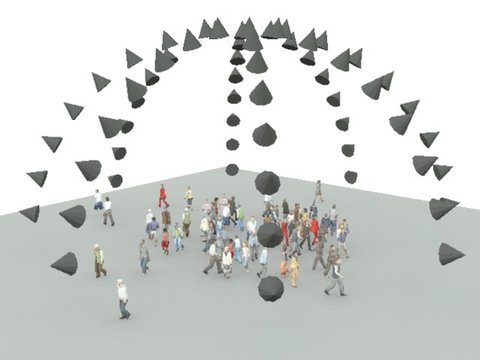

The scene shows 64 people (4 groups of 16) coming from the four branches of a cross-shape intersection and going to the opposite branch. In the middle of the crossing, a tangled pattern emerges as each pedestrian is trying to find his way through the crowd. The scene is captured using 64 different cameras regularly positioned around the crowd.

|

The dataset of extracted silhouettes is composed of 2x 903,103 = 1,806,206 masks (each mask and its symmetric). 808,666 of the 1.8 million silhouettes are non-occluded.

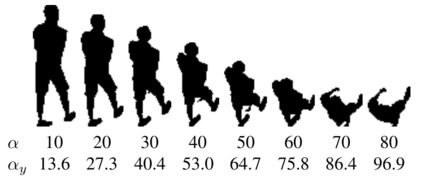

While generating the pedestrian shape dataset a question occurred: Since people have different shapes depending on the camera view angle, can the camera position be retrieved using this information? In this research we present an experiment on synthetic data to try answer this simple question.

|

Our silhouettes dataset have been split into two disjoint sets for training and testing. During testing we learned for a simple shape feature descriptor the association between shapes and view angles. We retrieved approximate angles for each testing query shape using a simple nearest neighbor search approach in the shape feature space. Example of plane reconstruction using our results are given below. Please refer to the paper for more details.